Update: This is an archived post. The latest impact factor for JMIR, released in June 2017, is an unprecedented 5.175 (2016). At the same time, JMIR mHealth and uHealth received a stunning inaugural impact factor of 4.636 (2016).

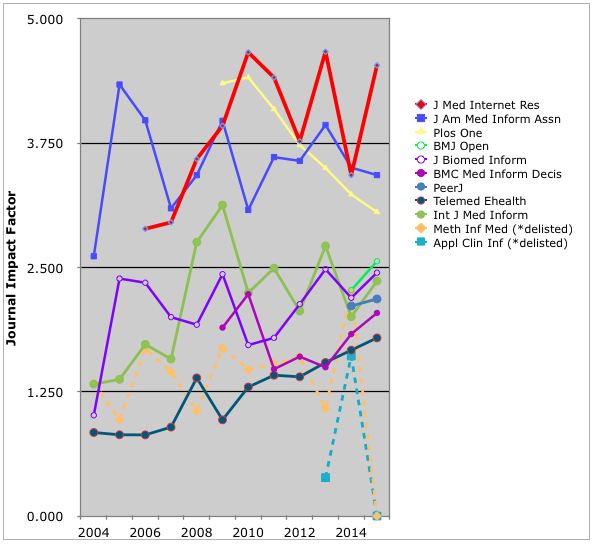

(Toronto/Philadelphia, Jun 14, 2016) Thomson Reuters has published the Journal Citation Reports (JCR) with its Journal Impact Factors for 2015, and we are pleased to see JMIR's impact factor increase by over a point, from 3.428 (2014) to 4.532 (2015) (5-year impact factor: 5.247). This puts JMIR ahead of the Journal of the American Medical Informatics Association (JAMIA), which dropped to 3.428 (and in more bad news for AMIA, the other official AMIA journal, ACI, was delisted for citation manipulation; see below). The 2015 Journal Impact Factor is defined as the number of citations in 2015 to articles published in the previous 2 years (2013-2014), divided by the number of articles published during that time. The Journal Impact Factor is a journal-level metric of excellence; it is not an article-level metric.
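Written out as formulas, the 2-year and 5-year impact factors for 2015 look as follows. The notation is our own: $C_{2015}(y)$ stands for the citations received in 2015 by items published in year $y$, and $N_y$ for the number of citable items (articles and reviews) published in year $y$.

```latex
\mathrm{IF}_{2015} = \frac{C_{2015}(2013) + C_{2015}(2014)}{N_{2013} + N_{2014}}
\qquad
\mathrm{IF}^{(5)}_{2015} = \frac{\sum_{y=2010}^{2014} C_{2015}(y)}{\sum_{y=2010}^{2014} N_{y}}
```

Both are ratios of citations to citable items for the same journal, which is why they describe the journal as a whole and say nothing about any single article in it.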

The Impact Factor is an increasingly controversial metric due to its frequent misuse (e.g., administrators comparing "raw" impact factor scores across disciplines) and to the opaque, arbitrary, and slow journal selection procedures of Thomson Reuters. This disadvantages journals in smaller disciplines such as medical informatics, which traditionally receive fewer citations than, for example, multidisciplinary or general medicine journals. As one innovation, Thomson Reuters now ranks journals by quartile (Q1, Q2, Q3, Q4) within their discipline. The impact factor has also been criticized for being open to manipulation, and the recent case of citation stacking (a "citation cartel") that led to the exclusion of 3 medical informatics journals from the impact factor serves as a reminder of that (see below). (As an aside, we are proud that we are still the #1 MI journal in that category even when journal-level self-citations are excluded.)
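As a rough illustration, quartile assignment can be sketched in a few lines of Python. This assumes the quartile is derived from Z = rank / N (a journal's rank divided by the number of journals in its category), with Q1 for Z ≤ 0.25, Q2 for 0.25 < Z ≤ 0.5, and so on. The function name `jcr_quartile` is our own and not part of any Thomson Reuters tool; the example ranks are the ones JMIR holds in the 2015 JCR (#2 of 20 in medical informatics, #5 of 87 in health services research).

```python
def jcr_quartile(rank: int, n_journals: int) -> str:
    """Return the quartile (Q1-Q4) of a journal ranked `rank` out of `n_journals`.

    Assumption: quartiles are derived from Z = rank / n_journals,
    with Q1 covering 0 < Z <= 0.25, Q2 0.25 < Z <= 0.5, and so on.
    """
    if not 1 <= rank <= n_journals:
        raise ValueError("rank must be between 1 and n_journals")
    z = rank / n_journals
    if z <= 0.25:
        return "Q1"
    if z <= 0.5:
        return "Q2"
    if z <= 0.75:
        return "Q3"
    return "Q4"


# JMIR's ranks in its two JCR categories (2015)
print(jcr_quartile(2, 20))  # medical informatics → Q1
print(jcr_quartile(5, 87))  # health services research → Q1
```

Because the quartile depends only on the relative rank within a category, it can be compared across disciplines in a way the raw impact factor cannot.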

While we at JMIR discourage obsession over the journal impact factor (particularly when it is misused as a proxy for the quality of individual articles), our ranking in the JCR is an important validation that even as a small open access publisher we can compete with journals published by publishing giants.

For 10 years now, JMIR has consistently been ranked in the first quartile (Q1) in both of its disciplines: medical informatics (Q1, #2/20, 93rd percentile) and health services research (Q1, #5/87, 94th percentile).

JMIR has also been the top-ranked (#1) journal by 5-year impact factor for a number of years.

These rankings are robust: they stay the same when journals are ranked by Eigenfactor (which takes into account the prestige/impact of the citing journals) or by "Impact Factor Without Journal Self-Cites".
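To make the "without journal self-cites" variant concrete, here is a minimal sketch. It assumes we already have, for the two-year window, the total citations received, the subset of those coming from the journal itself, and the number of citable items. The class and function names are hypothetical, and the counts in the example are invented for illustration; they are not JMIR's actual numbers.

```python
from dataclasses import dataclass


@dataclass
class CitationWindow:
    """Hypothetical citation counts for a journal's two-year impact factor window."""
    citations: int       # citations in the JCR year to items from the previous two years
    self_citations: int  # the subset of those citations made by the journal itself
    citable_items: int   # citable items published in the previous two years


def impact_factor(w: CitationWindow) -> float:
    return w.citations / w.citable_items


def impact_factor_without_self_cites(w: CitationWindow) -> float:
    # Self-citations are removed from the numerator; the denominator is unchanged.
    return (w.citations - w.self_citations) / w.citable_items


def self_citation_share(w: CitationWindow) -> float:
    # Fraction of all incoming citations that are journal self-citations.
    return w.self_citations / w.citations


# Invented example: 400 citations, 40 of them self-citations, 100 citable items
example = CitationWindow(citations=400, self_citations=40, citable_items=100)
print(impact_factor(example))                     # → 4.0
print(impact_factor_without_self_cites(example))  # → 3.6
print(self_citation_share(example))               # → 0.1
```

A journal whose ranking barely moves between the two variants is not relying on self-citations; the same ratio computed by `self_citation_share` underlies the 62% self-citation figure cited for the Journal of Medical Systems later in this post.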

By number of citable items, JMIR is also the largest journal in the medical informatics category.

However, even these category-specific rankings are sometimes of limited use, in particular for multidisciplinary journals such as JMIR, which fit into more categories than those selected by the JCR editors. Moreover, the current JCR categories sometimes lump together journals that do not belong together; for example, statistics journals are part of the medical informatics category, and, oddly enough, Statistical Methods in Medical Research (which is not a medical informatics journal) remains the top-ranked journal in the medical informatics category. JMIR is ranked #2, behind this statistics journal, with an almost identical impact factor.

We therefore keep reminding our prospective authors that the impact factor should not be the only factor determining where to submit an article. The journal's scope and audience (who reads the journal) are equally important if one wants to maximize the impact and influence of an article on key stakeholders and researchers, and this kind of influence is not measurable by citations (it is perhaps better measured by social media uptake and altmetrics).

We continue to encourage our authors to consider the full range of JMIR journals when submitting an article, and to match the scope of the journal to the topic of the article.

Newer JMIR sister journals are not officially listed in the JCR yet, but their unofficial impact factors (calculated from Web of Science data) look promising, for example:

- JMIR mHealth and uHealth: 2.03 (unofficial impact factor)

- JMIR Serious Games: 1.8 (unofficial impact factor)

- i-JMR: 1.5 (unofficial impact factor)

Quiz: Which of the following open access journals has the highest impact factor?

1) PloS One

2) PeerJ

3) BMC MDM

4) BMJ Open

5) JMIR

(scroll down for the answer - hint: the ranking is exactly the same as last year)

| Journal | Quartile (in category) | Impact Factor 2015 |
|---|---|---|
| 1. JMIR | Q1, Q1 | 4.532 |
| 2. PloS One | Q1 | 3.057 |
| 3. BMJ Open | Q2 | 2.562 |
| 4. PeerJ | Q1 | 2.183 |
| 5. BMC Med Inform Decis Mak | Q2 | 2.042 |

Citation Manipulation and Delisting of 3 Medical Informatics Journals

As an interesting side note, this year Thomson Reuters de-listed 26 journals for citation manipulation, and 3 of them are from the "Medical Informatics" category (which used to have 23 journals and is now down to 20). These de-listed journals are all subscription journals that used to have very small impact factors. One is the Journal of Medical Systems, owned by Springer, which was de-listed because 62% of its citations were self-citations (Source: Thomson Reuters). Two journals, Methods of Information in Medicine (MIM) and Applied Clinical Informatics (ACI) (both owned by Schattauer), were de-listed for "citation stacking". In previous cases of citation stacking, editors colluded in publishing a series of articles designed to boost the rankings of their respective journals. In this case, Thomson Reuters identified ACI as the recipient and MIM as the "donor" of citations, with 39% of all citations to ACI coming from MIM.

However, the impact factor padding occurred in both directions and goes back to 2014, when the impact factor was already successfully manipulated: the ACI editor published questionable papers in Methods in 2014 and 2015 citing all or most ACI articles from the impact factor-relevant years, and MIM editors returned the favor by publishing papers in ACI in 2014 and 2015 citing all Methods papers from the previous 2 years. ACI had an initial impact factor of 0.386 in 2013 (with only 32 citations for its 86 papers), which jumped to a self-citation-inflated IF of 1.6 in 2014 (at that time, Thomson Reuters did not take any action). Interestingly, both journals are official journals of the International Medical Informatics Association (IMIA), and ACI is also an official journal of the American Medical Informatics Association (AMIA).

It will be interesting to see if and how any of these scholarly organizations respond to this attempt to manipulate the impact factors of their official publications, in particular as the editor of ACI is also the IMIA Vice-President in charge of publications such as the Yearbook of Medical Informatics, which reviews important work of the past year and offers further opportunities to manipulate journal impact factors (as discussed here).
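The "donor" figure quoted above (39% of all citations to ACI coming from MIM) is a simple share: of all citations a journal receives, the fraction contributed by one particular citing journal. The sketch below computes that share and flags unusually dominant donors. The function names, the journal labels, the counts, and the 0.3 threshold are all hypothetical and chosen only so that "Journal A" mirrors the 39% figure; this is not Thomson Reuters' actual detection method.

```python
def donor_shares(citations_by_source: dict[str, int]) -> dict[str, float]:
    """Fraction of a journal's incoming citations contributed by each citing journal."""
    total = sum(citations_by_source.values())
    if total == 0:
        return {source: 0.0 for source in citations_by_source}
    return {source: count / total for source, count in citations_by_source.items()}


def flag_donors(citations_by_source: dict[str, int], threshold: float = 0.3) -> list[str]:
    """Citing journals whose share exceeds a hypothetical threshold, largest share first."""
    shares = donor_shares(citations_by_source)
    flagged = [source for source, share in shares.items() if share > threshold]
    return sorted(flagged, key=lambda source: shares[source], reverse=True)


# Invented counts of citations received by one journal, keyed by citing journal
incoming = {"Journal A": 39, "Journal B": 25, "Journal C": 20, "Journal D": 16}
print(donor_shares(incoming)["Journal A"])  # → 0.39
print(flag_donors(incoming))                # → ['Journal A']
```

A high donor share alone does not prove collusion (a small field may legitimately cite one neighbor heavily); in the ACI/MIM case it was the reciprocal pattern in both directions that made the stacking visible.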

Beyond the Journal Impact Factor

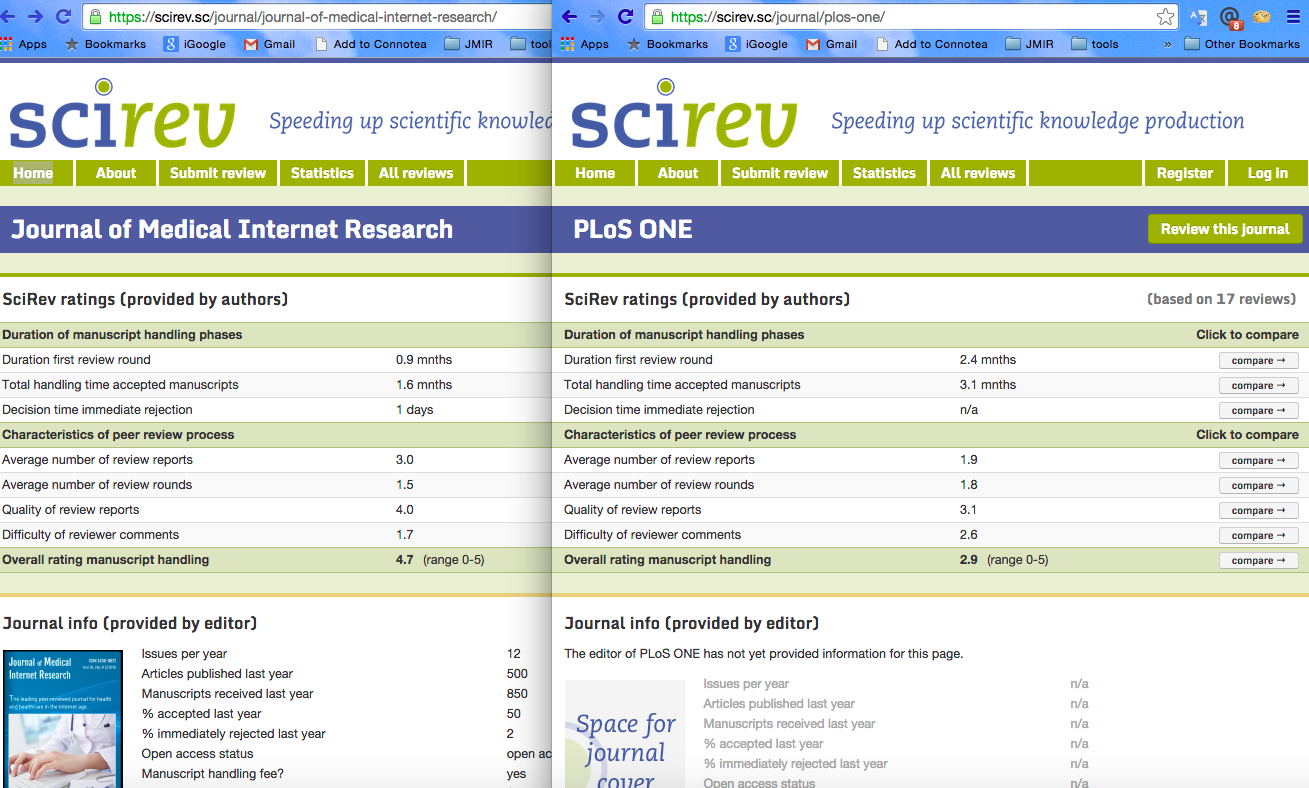

Authors care (and should care) about other metrics and ratings, such as author satisfaction with the review process and turnaround times, as evaluated for example by SciRev. JMIR ranks highly here as well (compare, for example, with the PloS One ratings).

Other metrics to look at are the twimpact factor (social media impact) and post-publication dissemination activities by the publisher (JMIR uses TrendMD to promote its published articles across the websites of other publishers, such as BMJ and the JAMA Network).